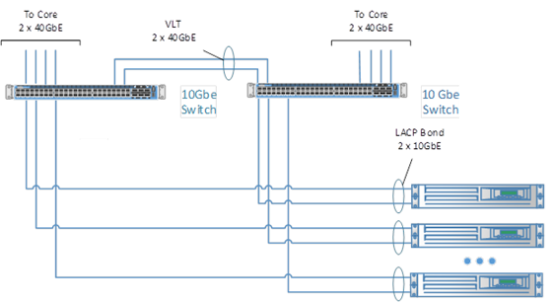

For economies of scale or a longer switch lifetime, you might decide to use 25 GbE or 100 GbE switches to provision the network infrastructure. Dell EMC engineers chose baseline switches for the Ready Architecture design to handle the anticipated maximum data flow rates over a 25 GbE connection. Once configured, you can see we have no problem pinging the I/O Aggregator. Data plane networking switches carry all OpenShift cluster ingress/egress traffic, all internal traffic, and all storage traffic. The only configuration that is required at this point is on the S4810 ToR switch as shown below. Typically, if any minimal configuration is needed on the I/O Aggregator, it is done via the Chassis Management Controller (CMC) web interface, which a server admin can also access. Note that because the uplink connectivity is automatically bundled into one port-channel, no spanning tree protocol (STP) is required or used.

Additional ports can be added via the expansion slots to provide additional bandwidth. The I/O Aggregator simply puts all eight 10 GbE uplink ports in one LACP port-channel (port-channel 128). I do not need to do any configuration on the I/O Aggregator blade switch; I put an IP address on VLAN 1 via the CLI for testing purposes only. Note that all I have done is connect the 40 GbE to 4 x 10 GbE Twinax breakout cables from the I/O Aggregator blade switch to the S4810 switch. The goal of this blog is to demonstrate how easy it is to use Dell's I/O Aggregator.

Figure: Dell PowerEdge I/O Aggregator connected to Dell Force10 S4810

In this blog I'll focus mostly on the Dell PowerEdge M I/O Aggregator and leave the detailed discussion of the Dell M1000e chassis for another blog. Note that these modules can be used together in any combination except two 4 x 10GBASE-T modules. The currently available modules include a 2 x 40 GbE QSFP port module in 4 x 10 GbE mode, a 4 x 10 GbE SFP+ module, and a 4 x 10GBASE-T module. The I/O Aggregator also has two Flex I/O expansion slots for additional modules. It is a great solution for those looking for a low-cost, advanced L2 blade switch for the M1000e with hassle-free configuration and expandable upstream connectivity for Dell blade servers. This is pretty cool, not only in terms of functionality, but also in terms of the consolidation and the mess of Ethernet and power cables avoided by not using standalone components. If quarter-height blade servers are used, the M1000e can support up to 32 servers.
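To make the Flex I/O module rules concrete, here is a small Python sketch that checks a module combination and counts the resulting 10 GbE uplink ports. The module names (`qsfp_2x40`, `sfp_4x10`, `baset_4x10`) and the function `uplink_ports` are made up for illustration, and the per-module port counts are my reading of the module list above (a 2 x 40 GbE QSFP module in 4 x 10 GbE mode counted as eight 10 GbE ports), not something taken from Dell tooling.

```python
from collections import Counter

# 10 GbE uplink ports contributed by each Flex I/O module type.
# Names are hypothetical; counts are an assumption based on the module list.
MODULE_PORTS = {
    "qsfp_2x40": 8,   # 2 x 40 GbE QSFP, each port in 4 x 10 GbE mode
    "sfp_4x10": 4,    # 4 x 10 GbE SFP+
    "baset_4x10": 4,  # 4 x 10GBASE-T
}

BASE_UPLINK_PORTS = 8  # base 2 x 40 GbE ports, configured as 8 x 10 GbE by default
MAX_SLOTS = 2          # two Flex I/O expansion slots


def uplink_ports(modules):
    """Return the total number of 10 GbE uplink ports for a module combination.

    Raises ValueError if the combination is not allowed.
    """
    if len(modules) > MAX_SLOTS:
        raise ValueError(f"only {MAX_SLOTS} Flex I/O slots are available")

    unknown = [m for m in modules if m not in MODULE_PORTS]
    if unknown:
        raise ValueError(f"unknown module type(s): {unknown}")

    # The one disallowed combination: two 4 x 10GBASE-T modules.
    if Counter(modules)["baset_4x10"] > 1:
        raise ValueError("two 4 x 10GBASE-T modules cannot be combined")

    return BASE_UPLINK_PORTS + sum(MODULE_PORTS[m] for m in modules)
```

For example, `uplink_ports([])` returns 8 for the base blade, and `uplink_ports(["qsfp_2x40", "sfp_4x10"])` returns 20 under these assumptions.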


The Dell PowerEdge M I/O Aggregator also provides 32 internal 10 GbE connections for the Dell blade servers you can install in the Dell PowerEdge M1000e chassis. If desired, these ports can also be used as 40 GbE stacking ports. The base blade comes with 2 x 40 GbE ports that are configured as 8 x 10 GbE ports by default. Think of it as an advanced layer 2 switch that provides expandable uplink connectivity.
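A quick way to see what those port counts mean in practice is to compute the ratio of server-facing bandwidth to uplink bandwidth. The sketch below is plain arithmetic on the numbers in this post (32 internal 10 GbE ports, 8 x 10 GbE uplinks by default); the function name `oversubscription` is hypothetical, and the calculation assumes every port runs at 10 GbE line rate.

```python
def oversubscription(internal_ports=32, uplink_ports=8, port_speed_gbps=10):
    """Ratio of total server-facing bandwidth to total uplink bandwidth."""
    if uplink_ports <= 0:
        raise ValueError("at least one uplink port is required")
    downstream_gbps = internal_ports * port_speed_gbps  # toward the blades
    upstream_gbps = uplink_ports * port_speed_gbps      # toward the ToR switch
    return downstream_gbps / upstream_gbps


# Base blade: 320 Gb/s toward the servers over 80 Gb/s of uplink.
print(oversubscription())                 # 4.0

# Doubling the uplinks with an expansion module halves the ratio.
print(oversubscription(uplink_ports=16))  # 2.0
```

The ratio is only a worst case with a fully populated chassis; with fewer blades installed, `internal_ports` drops and so does the ratio.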

The Dell PowerEdge M I/O Aggregator is a slick blade switch that plugs into the Dell PowerEdge M1000e chassis and requires barely any configuration or networking knowledge.
